-

Posts

13,238 -

Joined

-

Last visited

-

Days Won

8

Profile Information

-

Member Title

Experienced User

-

Country

USA

Converted

-

City

Denver

-

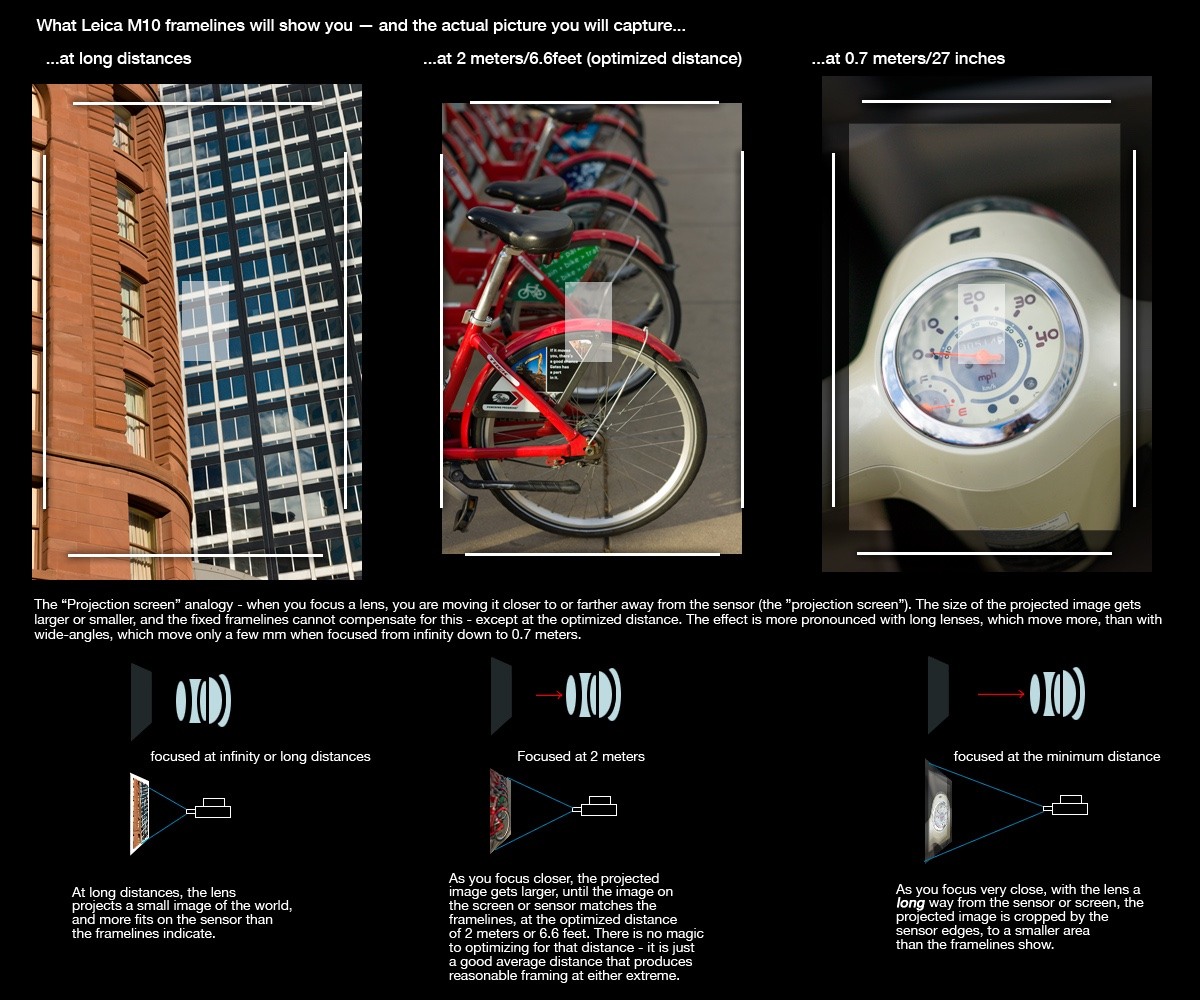

Well, the term "focus breathing" has been used confusingly to mean two opposite things - but assuming you mean "lenses without focus-breathing do NOT show FoV changes as they focus closer or farther" - probably no M lenses at all.

It is well-known, for example, that unit-focusing lenses (all the elements move together as one unit) change the field of view the more they are extended. And the "fixed-area" M framelines make this obvious. The lines are only perfectly accurate at one focus distance (which has varied over the decades, but is currently 2 meters/6.6 feet), as I documented when questions arose at the intro of the M10.

Example pictures made with the 75mm APO-Summicron-M. This lens clearly "breathes" when focused at different distances.

Most lenses that avoid focus-breathing use internal focus - the lens overall does not move or change length, just the glass elements inside, sliding around relative to one another. The SL lenses being an example (and quite possibly the S and Q also).

But internal focusing does not work with the M's rangefinder system - which counts on the total movement of the whole lens and its RF cam to align the double-images in the RF patch. So M lenses do not use internal focusing. At most they have slightly-moving "floating elements" to improve image quality (not size/magnification) when focused close.

OTOH, wide-angle unit-focusing lenses move LESS than long focal lengths. And therefore the wider the lens (18-21-24mm, or the "zoom" 16-18-21), the less focus breathing will occur. A 21mm unit-focus lens may only move a couple of mm to focus all the way from infinity to 0.7m (changing the field of view, or "breathing," only slightly), while a 135mm may move (and "breathe") ~10 times as much.
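For a rough feel for those numbers, the thin-lens equation gives the extension of a unit-focusing lens and the resulting change in field of view. This is only a sketch under loud assumptions: an idealized thin lens, subject distance measured from the lens rather than from the sensor plane, and a 36mm-wide frame. Real M lenses are thick multi-element designs, so actual travel will differ, and the function names are made up for illustration.

```python
import math

def extension_mm(focal_mm, subject_mm):
    """Thin-lens extension beyond the infinity position: f^2 / (s - f).

    Assumes subject_mm is measured from the (idealized) lens itself.
    """
    if subject_mm <= focal_mm:
        raise ValueError("subject must be farther away than one focal length")
    return focal_mm ** 2 / (subject_mm - focal_mm)

def horizontal_fov_deg(focal_mm, subject_mm, sensor_width_mm=36.0):
    """Horizontal field of view when the whole lens is racked out to focus."""
    image_distance = focal_mm + extension_mm(focal_mm, subject_mm)
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * image_distance)))

if __name__ == "__main__":
    # Nearest distances are illustrative: 0.7m for the 21 and 75, 1.5m for the 135.
    for focal, nearest in [(21, 700), (75, 700), (135, 1500)]:
        ext = extension_mm(focal, nearest)
        far = horizontal_fov_deg(focal, math.inf)
        near = horizontal_fov_deg(focal, nearest)
        print(f"{focal}mm: extension {ext:.2f}mm, FoV {far:.1f} deg -> {near:.1f} deg")
```

The extension grows with the square of the focal length, which is why the long lenses "breathe" visibly and the super-wides hardly at all.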

-

A 21 has been an important tool for many of the great Leica M documentary photographers... I would not give up on it too quickly.

So important for Paul Fusco (LOOK magazine and Magnum) that he even used it for his "self-portrait at work." (click photos in the link for a slide show - much of it 21mm). https://www.magnumphotos.com/newsroom/paul-fusco-1930-2020/

Ken Heyman was invited to work with his Leica 21 for anthropologist Margaret Mead, and US president Lyndon Johnson, for several books. He said LBJ's This America was 90% 21mm pictures. https://www.kenheyman.com/index https://www.kenheyman.com/p/books

Jill Freedman used a Leica with 21 and 35 for her book Firehouse - earned her an exhibit on the walls of the International Center of Photography (84 Ludlow St, NYC). The book features fire companies Ladder 61 and Engine 66 just off Bartow Ave. in The Bronx. https://fansinaflashbulb.wordpress.com/2018/03/23/jill-freedman-firehouse/ Earned her other "rewards" as well..... https://chromafineartgallery.com/product/fh56/

Personally, I've used a 20 or 21 as a documentary mainstay since 1977 - the very first lens I've acquired in every system since then. Inspired originally by that Fusco picture of the grape-harvester framed by vines (see first link), and reinforced by Freedman's firefighter pictures. I realized that "THIS is the way to put viewers right in the middle of the action, effectively." Even a 24/28 is too "stand-offish" for me.

Occupy Denver March, 2011. M9, 21mm Elmarit.

-

I have never found focus shift to be a problem. For the simple reason that I cannot imagine any reason at all on Bog's Green Earth to use a lens "stopped down just a little." Either I need maximum aperture for low-light journalism or maximum action-stopping shutter speed (the f/1.4 or f/2.0 I paid for). Or I want the best overall performance across the whole image (corners plus sufficient DoF). Traditionally, at 3-4 stops down = f/4.8-f/6.8. The one exception to that was using the non-ASPH M-mount 35 f/1.4s (Summilux or Nokton), which I used as pseudo-Summicrons at f/2 in low light because they were far too "dreamy" at f/1.4. And fortunately the copies I had of those were calibrated for f/2.0 rangefinder focusing anyway.
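The stop arithmetic behind those numbers is simple enough to sketch: each full stop multiplies the f-number by the square root of 2. Reading f/4.8 and f/6.8 as roughly 3.5 stops down from f/1.4 and f/2 is an assumption here, and the function name is hypothetical.

```python
import math

def stopped_down(f_number, stops):
    """f-number reached after closing the aperture by `stops` full stops."""
    return f_number * math.sqrt(2) ** stops

if __name__ == "__main__":
    # Nominal f/1.4 is really sqrt(2); f/2 is exact.
    for name, wide_open in [("f/1.4", math.sqrt(2)), ("f/2", 2.0)]:
        for stops in (3, 3.5, 4):
            result = stopped_down(wide_open, stops)
            print(f"{name} closed {stops} stops -> f/{result:.1f}")
```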

-

Voigtlander Color Skopar M lenses to L39/LTM, is there such a flange?

adan replied to Smudgerer's topic in Leica M Lenses

It is really the cameras that are different - thus requiring different lenses. M39 cameras are 1mm thicker. When Leica designed the M cameras, they reduced the camera flange distance by 1mm, exactly so that a legacy M39 lens plus adapter thickness of 1mm would work like the native M lenses.

But native M-mount lenses cannot be retrofitted in the other direction, for use on M39 cameras. Except for the few (as lct notes) that had a dual-mount built right in at the factory (long before Cosina came along).

M39 lenses can be "stepped up" 1mm (via adapter) to use on M cameras. M lenses cannot be "stepped down" 1mm to use on M39 cameras. No such thing as an adapter with a negative thickness (less than zero 😉 ).

Technically, if you are willing to permanently grind several mm of metal off the back of the M-mount C/V lens(es) - and then permanently mount an M39 thread-mount - and calibrate it, and bench-test the result for correct focusing - and pay a machine-shop for the service, that might be possible. Otherwise, it is a "one-way street."

(Canon had the reverse problem with the change from FD-manual to EOS-EF-autofocus lenses. No way to "grandfather" FD lenses to fit on an EOS body, without losing the ability to focus all the way to infinity - they become macro-only lenses. Except via an adapter that is also a 1.26x or 1.4x teleconverter, where the glass can compensate for the back-focus mismatch. Probably not something you want with super-wides, which would lose much of their "wideness.")
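The "no negative adapters" rule comes down to one subtraction. A minimal sketch, assuming the commonly published nominal flange distances (28.8mm for M39, 27.8mm for M, 42.0mm for Canon FD, 44.0mm for EF); the table and function names are illustrative only, and a real adapter also needs room for the mount hardware itself.

```python
# Nominal flange focal distances in mm. These are commonly quoted figures,
# used here as assumptions rather than as measured values.
FLANGE_MM = {"M39": 28.8, "M": 27.8, "FD": 42.0, "EF": 44.0}

def adapter_thickness_mm(lens_mount, body_mount):
    """Spacer thickness needed to hold the lens at its designed distance."""
    return round(FLANGE_MM[lens_mount] - FLANGE_MM[body_mount], 2)

def can_adapt(lens_mount, body_mount):
    """A plain (glassless) adapter only works if its thickness is positive."""
    return adapter_thickness_mm(lens_mount, body_mount) > 0

if __name__ == "__main__":
    for lens, body in [("M39", "M"), ("M", "M39"), ("FD", "EF")]:
        t = adapter_thickness_mm(lens, body)
        verdict = "OK" if can_adapt(lens, body) else "no plain adapter possible"
        print(f"{lens} lens on {body} body: {t:+.1f}mm -> {verdict}")
```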

-

I had a chance to compare the Double Aspherical (back when it was "only" $4500 used - c.2003) with the successor ASPH v.1. Main imaging differences were:

- At f/1.4, the AA was sharper than the ASPH in the center, but softer in the corners. Standard "journalistic" Leica lens design as practiced by Dr. Mandler (who had retired just before the Aspherical project began).
- The ASPH was more evenly "sharpish" across the whole frame at f/1.4.
- The AA was faintly "warmer/yellower" in color rendering. The ASPH was a bit pinker.

Had the price been the same (hah!), I would have preferred the AA.

The main feature both shared was significantly less "glow" and corner-coma "butterfly-wings" at larger apertures than the then-existing pre-ASPH/Aspherical 35 Summilux-M (and Summicron-Ms). They behaved like "normal" lenses - no drama or dreaminess; "just the facts, ma'am."

The sole reason for the stratospheric used prices of the AA was/is rarity-value/collectability. Not the image quality, one way or the other. The hand-ground aspheric elements had a very high discard/wastage rate, and thus were so expensive that Leica was losing money on every lens, at a selling-price the market would accept (similar to the original hand-ground 50mm f/1.2 Noctilux aspherical). Leica tried again with the ASPH, once they had developed (with Hoya) the techniques of moulded ASPHs.

-

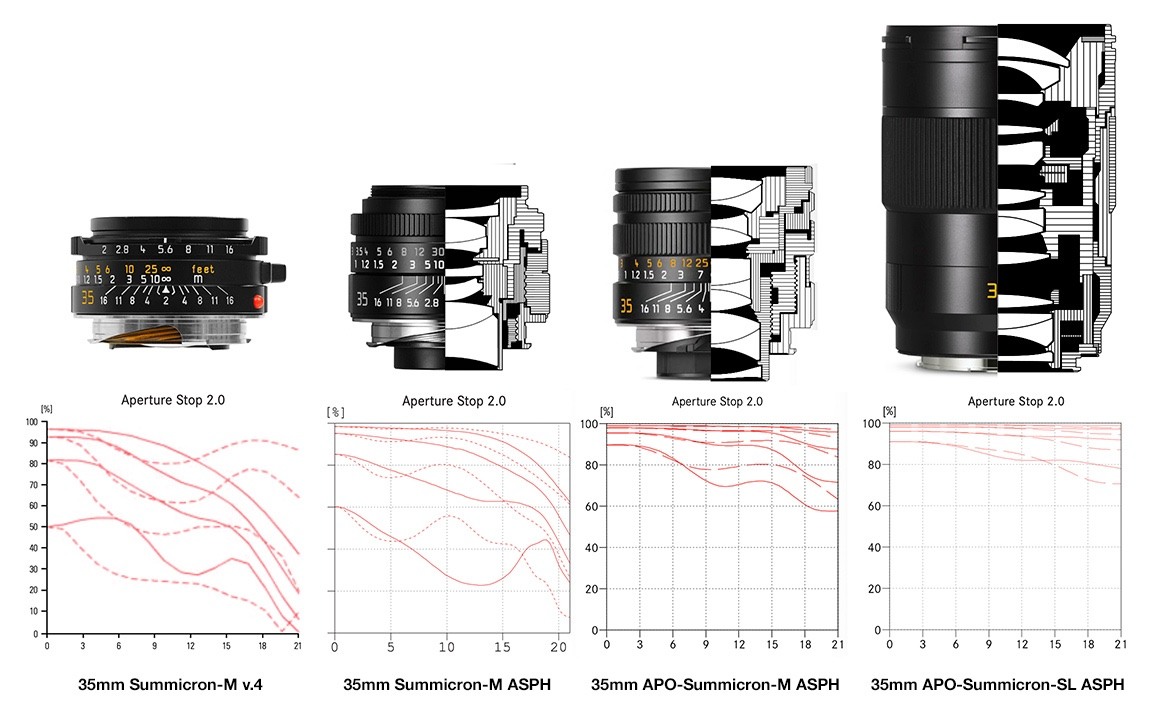

You want explicit? Here is explicit! Size (and by extension, roughly weight) vs. MTF performance. Certainly AF plays a role also, but if it were the only factor, the APO-Summicron-SL could be a lot shorter. (BTW, the Summicron-M v.4 will always be my choice - but facts are facts.)

-

THESE are quite interesting! Reach out with your hand, and cover their faces from the top of the eyes down. You will then see the mystical third and fourth "eyes" on their foreheads - always open and perceiving. Something Tut has as well. Very enlightened creatures, cats. 😉 Ommmmm!

-

Intriguing. There is no way any of my M lenses would do that on any of my M(10) bodies, without some force applied by hand. I would check and manipulate the following, with the troublesome lenses off the camera but available for inspecting/testing themselves.

The "rotary force" that is acting on the lens to make it "dismount" likely comes from the frameline-selection system (both the manual-selection lever on the front, and the internal connections to the viewfinder), which are spring-loaded. The default "no lens mounted" position is for 135, 35, and 24mm lenses. Mounting 50/75 and 21/28/90 lenses applies force to move the FLs out of the default position, and hold them in another position to frame correctly with those lenses (well, the 21 wouldn't actually be framing anything, but it will produce the same movement).

That force may be higher than normal for some reason, and therefore "kicking back," with the effect of pushing the lens counterclockwise towards disaster (should not happen). Try flipping the manual frame selector lever and see if it seems stiff or heavy to shift, when moving it back and forth. 50/75 lenses produce medium "kickback force" - 21/28/90 produce more kickback force. I am making an educated guess that the problem lenses are in those categories.

However... The camera's lens mount itself has pressure-springs to push the lens forward against the camera's mount-flanges from the inside when mounted - precisely to provide additional friction to mate the lens to the camera firmly, and prevent the lens from slipping counterclockwise to unmount without a hand turning it.

If the lens moves TOO easily when being mounted/unmounted, or wobbles even the slightest amount once clicked into place on the camera - those friction-producing springs may be worn out and too weak to do their job - i.e. press the lens flanges tightly against the camera flange. Or the camera's flange surface may be worn or slippery. Or the silver mount surface on the back of the lens(es) themselves may be worn down a bit or otherwise slippery (accumulated grease or metal dust).

Checking all those may at least give a hint as to what needs service.

-

Just to be clear, the M EV1 cannot legally even be operated in the US until it has actual FCC certification. From Leica's own order page:

And that would also apply to foreign tourists (if any these days) using their M EV1 on US territory (includes USVI, Puerto Rico, Guam, Northern Marianas, American Samoa). Odds of discovery or detection are, of course, extremely low - but all it takes is one (1) officious official (customs, or other law enforcement) to check the database of FCC-certified devices, for that to turn into a headache.

BTW - I have no doubt that the EV1 is fully compliant with FCC radio-wave emissions rules. But only the FCC's final ruling will count.....

-

Leica M EV1: The first M with EVF instead of Rangefinder

adan replied to LUF Admin's topic in Leica M EV1

I think one has to understand that "normal" product economics do not apply to all products. "Cost of production + X" does not always apply. See, for example, Veblen Goods: https://en.wikipedia.org/wiki/Veblen_good

Becoming a "Leica M" owner in the 21st century, to some extent, means "gaining entrée to an exclusive club," not just buying a camera. And as with other exclusive clubs, there is an Initiation Fee. Basically a test of "Are you really rich enough to hob-nob with the rest of us** (as well as help maintain the club's cachet)?" Apparently about a €7000 "cover charge" or "pay to play," these days.

I really kinda giggled to myself all through the past 9 months of discussion, reading the expectations that "an EVF M will be a cheaper M." Or "an even fancier M." But I figured no one would want to believe me ahead of the reality - and Leica could have surprised me (heck, RR probably could produce a €120K auto, if they wanted) - so I kept mum.

_______________

**Not my personal attitude - I don't "do" clubbishness or conspicuous consumption. I don't use Ms because I am rich - but in order to become rich(er).

"Vox Populi, vox Dei." Most Murcan drivers can't be arsed to learn a clutch-and-stick (just as, it appears, some Leica-M wannabees can't be arsed to learn how to use a rangefinder. 😜 ) (However physical disabilities, also as with the M-EV1, understandably play a role). There are some Murcan manual-shift cars still made - but realistically, they simply do not sell well overall, so are now the "luxury" option (which will typically NOT include rental fleets, except by special request). Can't stay in business making things people won't buy. https://www.caranddriver.com/features/g20734564/manual-transmission-cars/?utm_source=google&utm_medium=cpc&utm_campaign=dda_ga_cd_md_bm_prog_org_us_g20734564&gad_source=1&gad_campaignid=19550043290&gbraid=0AAAAACfH9WhVUnC_S5V7CS9Lux8ssYXGX&gclid=EAIaIQobChMI_rG4l-HbkAMVtjWtBh1plxKREAAYASAAEgK7tvD_BwE Might be worth noting there are no Stellantis/Chrysler cars on that list, except Jeeps. And Stellantis is headquartered in............Hoofddorp. 😉😁😜🤪 Those danged Dutch!

-

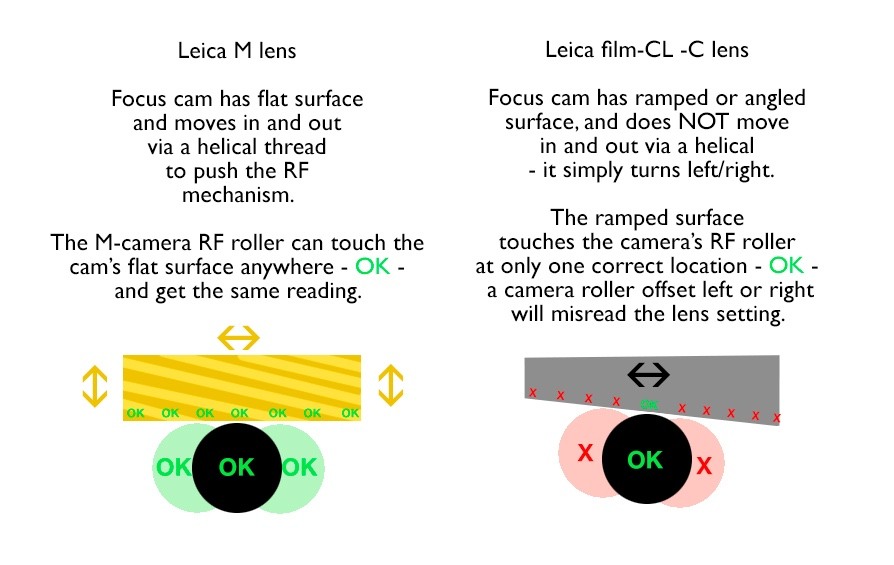

I have no idea. I hope so. Leica has been tightening tolerances all across its products with every passing year (and decade). That was one of the main reasons given for building the all-new factory in Wetzlar in 2014.

The essential problem is the tolerances for the left/right positioning of the camera's RF contact roller. With M lenses on M cameras, that tolerance could have some leeway left and right, because the entire focus cam surface is flat and even. And that surface moving in and out on a helix thread, to move the RF images around, moves the RF roller. It does not matter where the camera roller makes contact with the lens cam.

With the -C lenses on the CL camera, with an entirely different RF, some money was saved by leaving out the cam's moving helix and flat surface. Instead, their cam did NOT move in and out and was NOT flat - it had a sloped or ramped surface that got higher or lower, rather than actually moving in and out, as the lens turned for focusing. And that required the camera's RF roller to always be in the exact same place, in every camera that came from Minolta's factory.

The M camera rollers of the time did not need that left-right precision, so long as they used M lenses with the flat, wide cam surface.

-

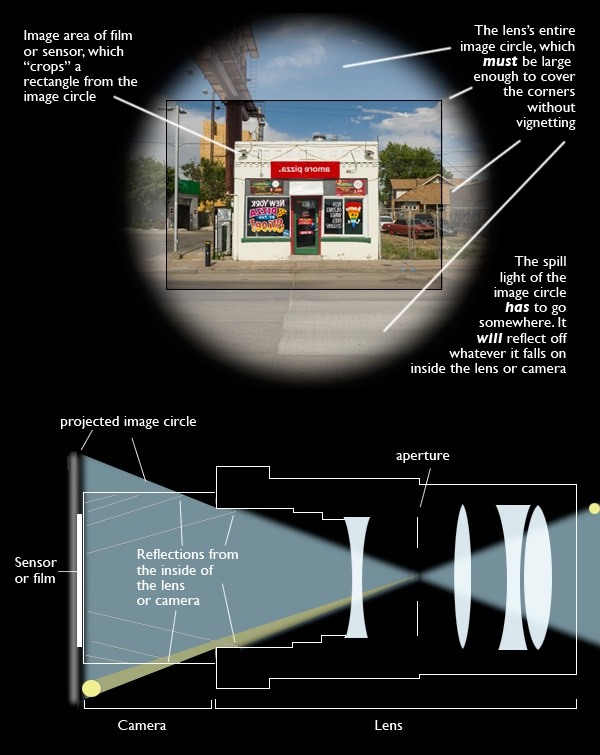

Square (or more commonly, rectangular) lens shades were developed for and by the motion picture industry - 90 years ago or more. https://cinemagear.com/details/fox-cine-simplex.html https://www.antiquephotographica.info/Mitchell Camera Corporation Matte Box No. 982 West Hollywood Web Page 10-3-2019.htm

They also serve as matte-boxes for special effects (e.g. split-screen shots where an actor could also play his own twin brother). Just required adding a cropping mask to the front. Or as filter mounts.

Leica had a few also in the 1930s: https://wiki.l-camera-forum.com/leica-wiki.en/index.php?title=XIOOM https://wiki.l-camera-forum.com/leica-wiki.en/index.php?title=SOOMP

Cameras that actually take square pictures (Hasselblad, Rollei, Yashica, etc) do provide truly "square" lens hoods. A Rollei square hood for their 1932 camera: https://onlinedarkroom.blogspot.com/2016/12/rolleiflex-old-standard-review.html

The advent of fisheye lenses (~1970), with extremely wide fields of view, brought the creation of the "tulip" lens hood - a square opening (as projected out into 3D space) cut into a circular tube of metal. https://wiki.l-camera-forum.com/leica-wiki.en/index.php?title=16mm_f/2.8_Fisheye-Elmarit-R

Rectangular hoods - in theory - can crop out excess light right down to the edges of the rectangular picture (film, sensor) area, doing the best possible job of avoiding flare artifacts. Round hoods, like round lenses, let a lot of useless light into the camera (the full "image circle"). If they don't, they will cause vignetting (dark shadows in the picture corners).

But round lens hoods have historically been much easier (i.e. cheaper) to manufacture - buy a hollow metal tube right from the metal foundry, cut off the right length needed, machine some threads on the back, screw it on your lens. And, of course, they can be built right into a round lens, to telescope.

Many companies stayed with round hoods into the AF era (1990s) - or even today (see: Leica's own current 50mm lenses).
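To put a rough number on the "useless light" a round hood admits: compare the area of the picture rectangle with the area of the smallest circle that contains it. A sketch only, assuming the image circle exactly circumscribes a 24x36mm frame (real image circles are usually somewhat larger, which makes the fraction smaller still); the function name is hypothetical.

```python
import math

def frame_fraction_of_image_circle(width_mm=36.0, height_mm=24.0):
    """Share of the circumscribed image circle that the frame actually uses."""
    diagonal = math.hypot(width_mm, height_mm)
    circle_area = math.pi * (diagonal / 2) ** 2
    return (width_mm * height_mm) / circle_area

if __name__ == "__main__":
    full_frame = frame_fraction_of_image_circle()
    square = frame_fraction_of_image_circle(56.0, 56.0)  # nominal 6x6 frame
    print(f"24x36 frame uses {full_frame:.0%} of its image circle")
    print(f"Square frame uses {square:.0%} of its image circle")
```

Under that assumption, well over a third of the light a round hood lets through falls outside the picture area.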